2.1 First Steps with Hugging Face

In the previous chapter, we learned about several fundamental concepts of NLP and developed a first intuition for how it all works. In this second chapter, we will look in more detail at the Hugging Face libraries and at how to use them to tackle many NLP tasks with just a few lines of code. Let’s begin!

What is Hugging Face?

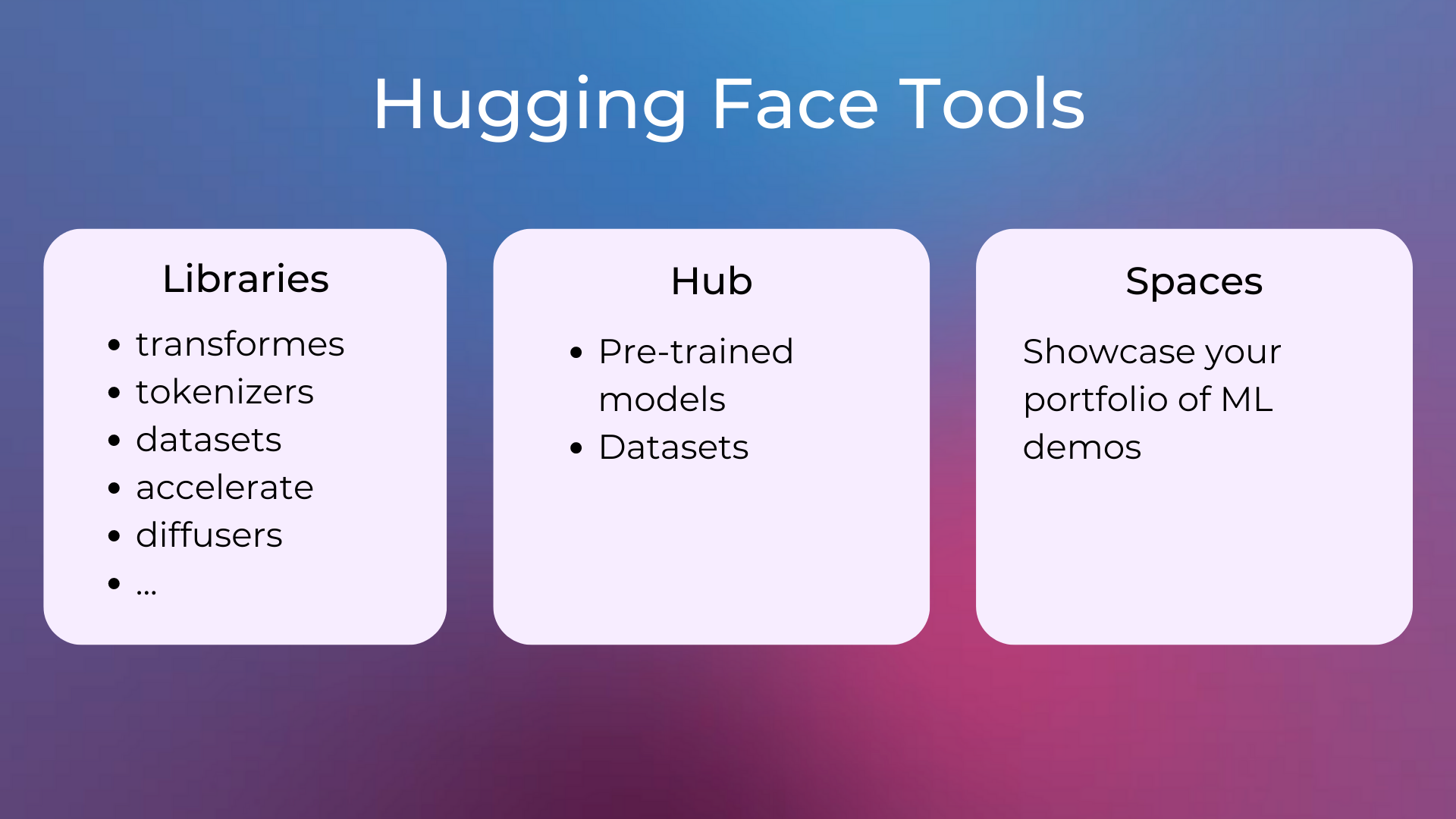

Hugging Face is a company that develops machine learning tools for developers and researchers, with a strong focus on open source. It’s best known for its transformers library, which provides an easy interface to Transformer models (currently the de facto standard for NLP, and increasingly successful in other domains too), and for its platform where users share machine learning models and datasets. More recently, it also launched Hugging Face Spaces, a simple way to host machine learning demos directly on your Hugging Face account.

How Hugging Face Democratized NLP

Hugging Face has democratized the field of natural language processing by providing easy-to-use tools that help developers and researchers solve complex NLP tasks with state-of-the-art Transformer models.

Of course, it’s possible to build and use Transformer models directly with PyTorch, TensorFlow, or other deep learning frameworks, but the Hugging Face libraries make this much easier. PyTorch and TensorFlow are lower-level libraries that provide the building blocks for creating machine learning models, while Hugging Face provides a higher-level interface on top of them for working with NLP models.
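To make the higher-level versus lower-level distinction concrete, here is a minimal sketch of sentiment analysis written both ways. It assumes `transformers` and `torch` are installed; the checkpoint ID and the function names are illustrative choices, not something this lesson prescribes. The third-party imports sit inside the functions so the file loads even before the libraries are installed, and nothing is downloaded until a function is called.

```python
# Sentiment analysis with transformers at two levels of abstraction.
# Assumption: `pip install transformers torch`; the checkpoint is an illustrative choice.
MODEL_ID = "distilbert/distilbert-base-uncased-finetuned-sst-2-english"


def classify_with_pipeline(text: str) -> dict:
    """High-level: pipeline() handles tokenization, inference and post-processing."""
    from transformers import pipeline  # lazy import: only needed when called

    classifier = pipeline("sentiment-analysis", model=MODEL_ID)
    return classifier(text)[0]  # a dict with "label" and "score" keys


def classify_by_hand(text: str) -> dict:
    """Lower-level: the same steps written out with a tokenizer, a model and PyTorch."""
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForSequenceClassification.from_pretrained(MODEL_ID)

    inputs = tokenizer(text, return_tensors="pt")  # text -> tensors of token IDs
    with torch.no_grad():  # inference only, so no gradients are needed
        logits = model(**inputs).logits  # unnormalized scores, shape (1, num_labels)
    probs = torch.softmax(logits, dim=-1)[0]  # scores -> probabilities
    best = int(probs.argmax())
    return {"label": model.config.id2label[best], "score": float(probs[best])}
```

Calling either function on a sentence such as `"Hugging Face makes NLP much easier!"` should return a dict with `label` and `score` keys, and the two should agree: the pipeline runs the same tokenizer, model, and softmax internally, it just hides those steps.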

Hugging Face Libraries

Read this article to see the complete list of libraries and services that Hugging Face is working on. The most popular ones are:

- The transformers library: provides APIs and easy-to-use functions to use Transformer models or download pre-trained ones. The models support tasks from different ML fields, such as NLP, Computer Vision, Audio, and Multimodal tasks.
- The tokenizers library: provides optimized tokenizers that are often used together with models from the transformers library.
- The datasets library: provides an easy way to access and share datasets.
- The accelerate library: makes it easy to run PyTorch training and inference in distributed settings.
- The diffusers library: provides pre-trained diffusion models, commonly used for vision tasks such as text-to-image generation.

In this course, we’ll mainly deal with the transformers, tokenizers, and datasets libraries.
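The tokenizers library can be tried without downloading anything. The sketch below, assuming `tokenizers` is installed, trains a tiny byte-pair-encoding (BPE) tokenizer on a few in-memory sentences; the corpus, vocabulary size, and helper names are toy values chosen for illustration.

```python
# Training a tiny BPE tokenizer with the tokenizers library, fully offline.
# Assumption: `pip install tokenizers`; corpus, vocab size and names are illustrative.
TOY_CORPUS = [
    "Hugging Face provides tools for natural language processing.",
    "Tokenizers split text into tokens that models can process.",
    "Pre-trained models and datasets are shared on the Hugging Face Hub.",
]


def train_toy_tokenizer(corpus=TOY_CORPUS, vocab_size: int = 200):
    """Train a byte-pair-encoding (BPE) tokenizer on an in-memory list of strings."""
    from tokenizers import Tokenizer  # lazy import: only needed when called
    from tokenizers.models import BPE
    from tokenizers.pre_tokenizers import Whitespace
    from tokenizers.trainers import BpeTrainer

    tokenizer = Tokenizer(BPE(unk_token="[UNK]"))  # unseen symbols map to [UNK]
    tokenizer.pre_tokenizer = Whitespace()  # split into words and punctuation first
    trainer = BpeTrainer(vocab_size=vocab_size, special_tokens=["[UNK]"])
    tokenizer.train_from_iterator(corpus, trainer=trainer)  # learn merges from the corpus
    return tokenizer


def tokenize(tokenizer, text: str) -> list:
    """Return the string tokens the tokenizer produces for `text`."""
    return tokenizer.encode(text).tokens
```

For example, `tokenize(train_toy_tokenizer(), "Hugging Face tokenizers")` returns a list of subword strings; the exact split depends on the merges learned from the toy corpus. With pre-trained models you would normally load the tokenizer that ships with the model (for instance via `AutoTokenizer.from_pretrained`) instead of training a new one.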

Hugging Face Hub: Models and Datasets

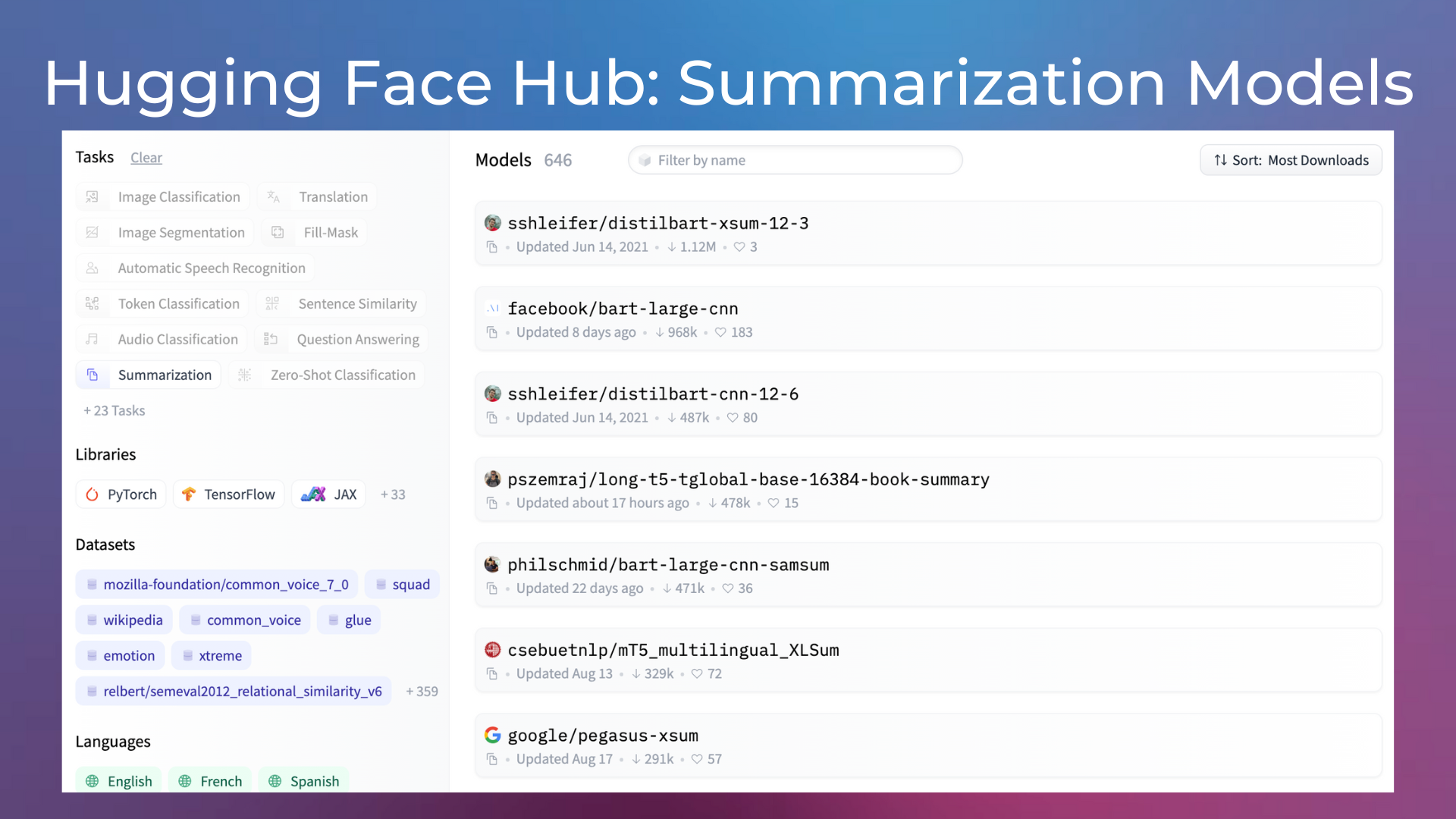

Let’s take a brief look at the Hugging Face Hub. Open the models page of the Hub and look for text summarization models using the filters in the left sidebar.

The page shows a list of pre-trained models already fine-tuned for text summarization, each with its number of downloads. A model’s name has two parts separated by a slash: the name of the account that published the model and the name of the model itself. For example, facebook/bart-large-cnn is the model bart-large-cnn published by the facebook account.
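The same search can be done from Python with the `huggingface_hub` library. This is a sketch assuming `huggingface_hub` is installed; `split_repo_id` and `top_models_for_task` are hypothetical helper names, and the task string is passed to `list_models` as a tag filter. The first helper simply makes the two-part naming scheme explicit.

```python
# Searching the Hub from Python and splitting model IDs into their two parts.
# Assumption: `pip install huggingface_hub`; both helper names are hypothetical.


def split_repo_id(repo_id: str) -> tuple:
    """Split "account/model" into (account, model), e.g. "facebook/bart-large-cnn"."""
    account, sep, name = repo_id.partition("/")
    # Reject IDs that do not follow the two-part "account/model" format.
    if not sep or not account or not name or "/" in name:
        raise ValueError(f"expected 'account/model', got {repo_id!r}")
    return account, name


def top_models_for_task(task: str = "summarization", limit: int = 5) -> list:
    """Return (model_id, downloads) pairs for the most downloaded models tagged `task`."""
    from huggingface_hub import HfApi  # lazy import; this function makes network requests

    models = HfApi().list_models(filter=task, sort="downloads", direction=-1, limit=limit)
    return [(m.id, m.downloads) for m in models]
```

`split_repo_id("facebook/bart-large-cnn")` returns `("facebook", "bart-large-cnn")`, while `top_models_for_task("summarization")` queries the Hub and returns up to five `(model_id, downloads)` pairs, most downloaded first.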

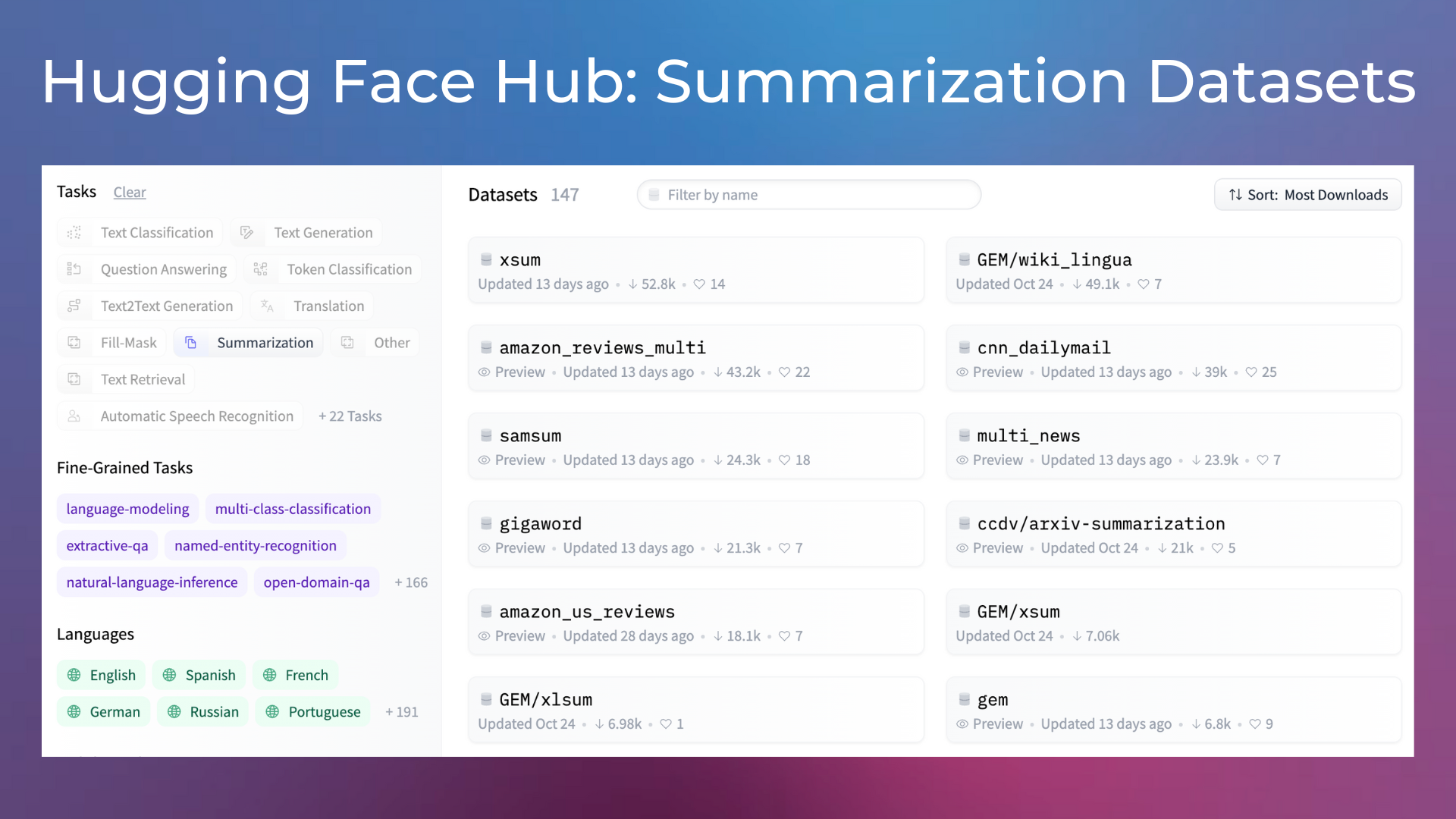

Similarly, open the datasets page of the Hub and look for datasets available for text summarization.

If no open model is available for your specific task, you can use these datasets to fine-tune a pre-trained model on it.
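Datasets found on the Hub can be loaded with the datasets library. The sketch below assumes `datasets` is installed and uses `abisee/cnn_dailymail` (config `3.0.0`, columns `article` and `highlights`) as an illustrative summarization dataset; swap in whichever dataset you found. Streaming mode reads examples on the fly instead of downloading every data file first.

```python
# Peeking at a summarization dataset from the Hub with the datasets library.
# Assumptions: `pip install datasets`; the dataset ID, config and column names
# belong to one popular summarization dataset and are illustrative choices.
from itertools import islice

DATASET_ID = "abisee/cnn_dailymail"
CONFIG = "3.0.0"


def preview_examples(n: int = 3) -> list:
    """Stream the first `n` validation examples without downloading the whole dataset."""
    from datasets import load_dataset  # lazy import; this function makes network requests

    stream = load_dataset(DATASET_ID, CONFIG, split="validation", streaming=True)
    # Each example is a dict: this dataset stores the input text in "article"
    # and the reference summary in "highlights".
    return [
        {"article": example["article"][:200], "summary": example["highlights"]}
        for example in islice(stream, n)
    ]
```

`preview_examples(2)` returns two dicts, each holding the first 200 characters of an article and its reference summary, which is a quick way to check a dataset’s format before deciding to fine-tune on it.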

Hugging Face Hub: Tasks

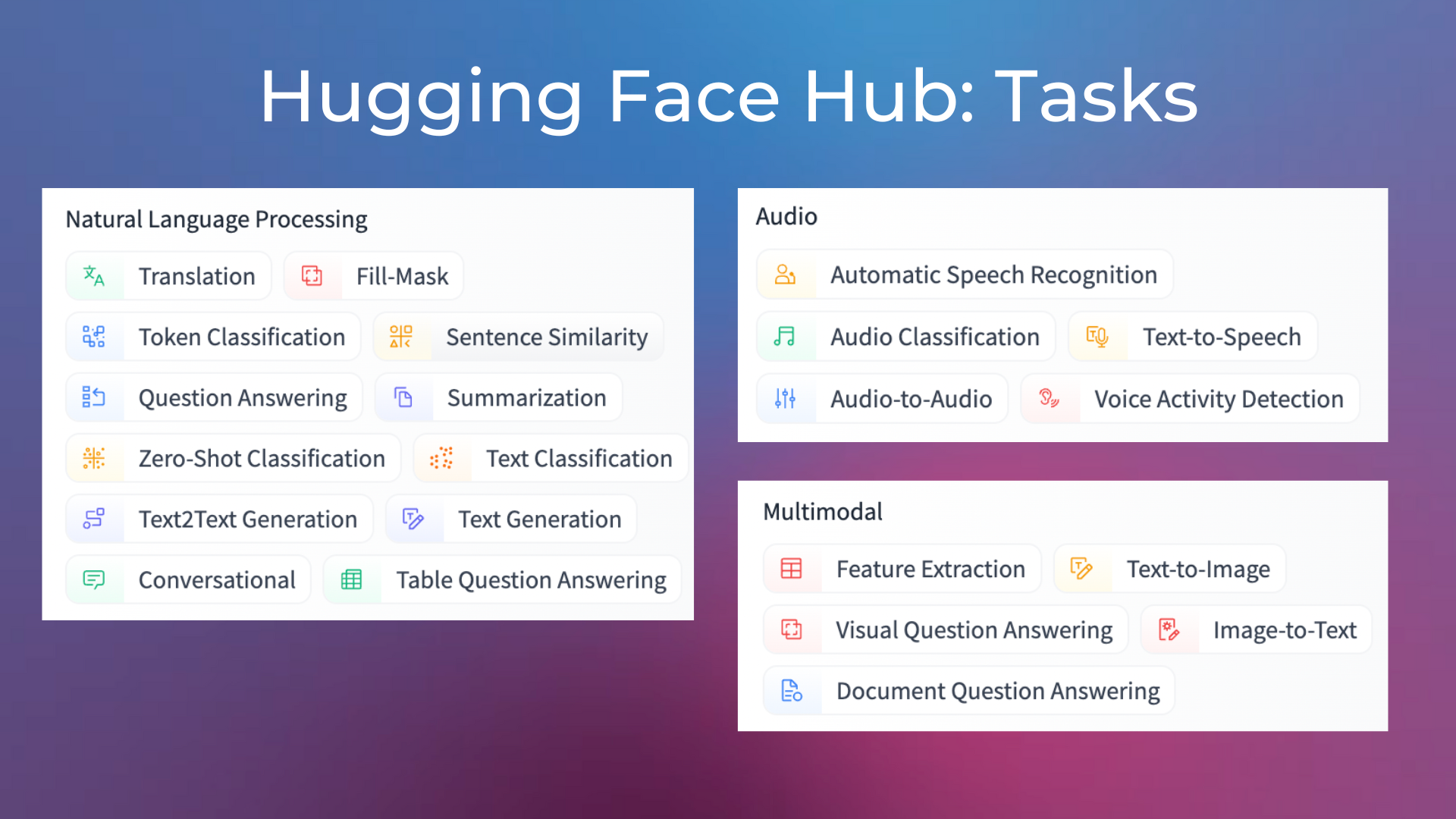

Hugging Face’s open-source libraries, datasets, and models make it easy to solve a wide range of natural language processing tasks. The image above shows the top-level tasks that can be solved with models from the Hub. Keep in mind that the Hub also hosts Computer Vision and Reinforcement Learning models.

Beyond these, you’ll find pre-trained models for more specific tasks, such as sentiment analysis of tweets or text generation in Italian.
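In code, a Hub task usually maps to a task name understood by the transformers `pipeline()` function, and a more specific need maps to a specific checkpoint. The sketch below assumes `transformers`, `torch`, and `sentencepiece` (needed by the translation model’s tokenizer) are installed; the three model IDs are illustrative picks, not recommendations from this lesson.

```python
# One pipeline() call per task: the task name selects the pre/post-processing,
# the model ID selects a specific checkpoint from the Hub.
# Assumption: `pip install transformers torch sentencepiece`; model IDs are illustrative.
SPECIALIZED_MODELS = {
    # task name understood by pipeline() -> checkpoint on the Hub
    "sentiment-analysis": "cardiffnlp/twitter-roberta-base-sentiment-latest",  # tweets
    "summarization": "facebook/bart-large-cnn",  # news articles
    "translation_en_to_it": "Helsinki-NLP/opus-mt-en-it",  # English -> Italian
}


def run_task(task: str, text: str, **kwargs) -> dict:
    """Run `text` through the checkpoint assigned to `task` and return the first result."""
    from transformers import pipeline  # lazy import; downloads the model on first use

    nlp = pipeline(task, model=SPECIALIZED_MODELS[task])
    return nlp(text, **kwargs)[0]
```

For example, `run_task("translation_en_to_it", "Hugging Face makes NLP easy.")` returns a dict with a `translation_text` key, the sentiment model returns `label` and `score`, and the summarization model returns `summary_text` (generation options such as `max_length=60` can be passed through `kwargs`).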

Hugging Face Spaces

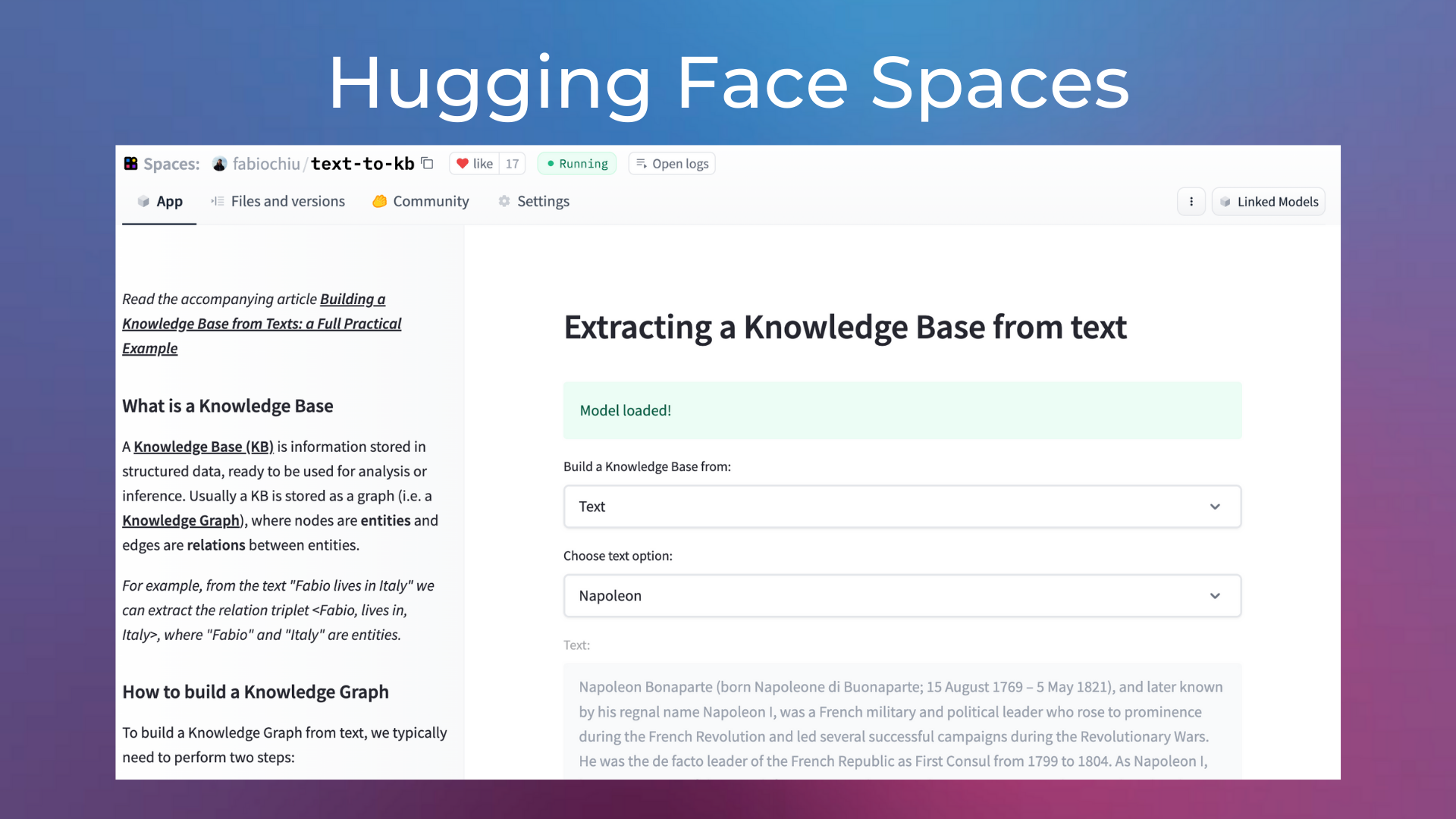

As mentioned earlier, Hugging Face Spaces lets you host your machine learning demos directly on Hugging Face, making it easy to share your ML portfolio and showcase your projects. Currently, you can host ML demos built with Gradio, Streamlit, or plain HTML and JavaScript.
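Here is a minimal sketch of the core of a Gradio Space’s `app.py`, assuming the Space uses the Gradio SDK and has a `requirements.txt` listing `transformers` and `torch`; the model ID, labels, and function names are illustrative.

```python
# Core of an app.py for a Gradio demo of the kind that can be hosted on a Space.
# Assumptions: Gradio SDK Space, requirements.txt with transformers and torch;
# the model ID, labels and function names are illustrative.
MODEL_ID = "facebook/bart-large-cnn"
_summarizer = None  # created on the first request, then reused


def summarize(text: str) -> str:
    """Gradio calls this with the textbox content and displays the returned string."""
    global _summarizer
    if not text.strip():
        return ""  # nothing to summarize, so skip loading the model
    if _summarizer is None:
        from transformers import pipeline  # lazy import: load the model only when needed

        _summarizer = pipeline("summarization", model=MODEL_ID)
    result = _summarizer(text, max_length=60, min_length=10, do_sample=False)
    return result[0]["summary_text"]


def build_demo():
    """Wire `summarize` to a text input and a text output."""
    import gradio as gr

    return gr.Interface(
        fn=summarize,
        inputs=gr.Textbox(lines=8, label="Text to summarize"),
        outputs=gr.Textbox(label="Summary"),
        title="Summarization demo",
    )
```

To actually serve the demo, end `app.py` with `build_demo().launch()`; when the Space starts, it runs `app.py` and Gradio serves the interface. Creating the pipeline lazily defers the model download until the first request and then reuses the same pipeline for every later one.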

Here’s an example of a Hugging Face Space showcasing how to extract knowledge graphs from documents.

If you are a junior data scientist looking for a job, building a portfolio of ML demos on Hugging Face Spaces is a great idea. Consulting companies can use Spaces too, for example by training their sales teams to present these demos to clients.

Quiz

Which Hugging Face library provides APIs and easy-to-use functions to use Transformer models or download pre-trained models?

1. transformers
2. tokenizers
3. accelerate

Answer

The correct answer is 1.

Complete the sentence with the correct option. Hugging Face provides a _____ interface for working with NLP models than PyTorch and TensorFlow.

1. lower-level
2. higher-level

Answer

The correct answer is 2.

True or False. All the models available on the Hugging Face Hub have been trained by Hugging Face.

Answer

The correct answer is False. Anyone can create an account and upload models to the Hugging Face Hub.

True or False. On the Hugging Face Hub you can find models for NLP tasks only.

Answer

The correct answer is False. There are models for Computer Vision and Reinforcement Learning as well. However, the majority of models today are for NLP.

True or False. Hugging Face Spaces lets you deploy and host your ML demos on Hugging Face, but you need a premium account.

Answer

The correct answer is False. Most demos on Hugging Face Spaces run on the free tier, whose limited CPU resources are good enough for most demos (they are demos, after all). You can pay for better hardware, such as GPUs.

Questions and Feedback

Have questions about this lesson? Would you like to exchange ideas? Or would you like to point out something that needs to be corrected? Join the NLPlanet Discord server and interact with the community! There’s a specific channel for this course called practical-nlp-nlplanet.