1.5 Stemming, Lemmatization, Stopwords, POS Tagging

Contents

1.5 Stemming, Lemmatization, Stopwords, POS Tagging#

Inflection#

Languages we speak and write are made up of several words often derived from one another. For example, in English the word “eat” becomes “eats” when the subject is in the third-person singular form and becomes “eating” if in the present continuous tense.

When a language contains words that are derived from another word as their use in the speech changes, it’s called Inflected Language.

In grammar, inflection is the modification of a word to express different grammatical categories such as tense, case, tone of voice, person, number, and gender. An inflection expresses one or more grammatical categories with a prefix, suffix or infix, or another internal modification such as a vowel change.

Inflection and Bag of Words#

In the previous lesson, we were classifying texts containing both the words “team” and “teams”, considering them as different words in the same way as the words “team” and “basketball” are. However, we may want to consider “team” and “teams” the same, as they’re the same word with a different inflection after all.

This normalization step is typically done by stemming or lemmatization.

Stemming#

Stemming is the process of reducing inflected words to their stem, i.e. their base or root form. The stem need not be identical to the root form of the word or may not be an existing word in the first place, but it’s usually sufficient that related words reduce to the same stem.

Stemming in Python#

The NLTK library has stemmers built for many languages. A popular stemmer for the English language is the PorterStammer, which performs suffix stripping to produce stems.

The Porter stemmer algorithm does not keep a lookup table for actual stems of each word but applies algorithmic rules to generate stems.

Let’s see how to use it. First, we import the necessary modules.

import nltk

nltk.download('punkt')

from nltk.tokenize import word_tokenize

from nltk.stem import PorterStemmer

The PorterStemmer class can be imported from the nltk.stem module. Then, we instantiate a PorterStemmer object.

stemmer = PorterStemmer()

The function stem then can be used to actually do stemming on words.

print(stemmer.stem("cat")) # -> cat

print(stemmer.stem("cats")) # -> cat

print(stemmer.stem("walking")) # -> walk

print(stemmer.stem("walked")) # -> walk

print(stemmer.stem("achieve")) # -> achiev

print(stemmer.stem("am")) # -> am

print(stemmer.stem("is")) # -> is

print(stemmer.stem("are")) # -> are

cat

cat

walk

walk

achiev

am

is

are

To stem all the words in a text, we can use the PorterStemmer on each token producted by the word_tokenize function.

text = "The cats are sleeping. What are the dogs doing?"

tokens = word_tokenize(text)

tokens_stemmed = [stemmer.stem(token) for token in tokens]

print(tokens_stemmed)

# ['the', 'cat', 'are', 'sleep', '.', 'what', 'are', 'the', 'dog', 'do', '?']

['the', 'cat', 'are', 'sleep', '.', 'what', 'are', 'the', 'dog', 'do', '?']

Stemming with Languages Other Than English#

If we want to do stemming on words from languages other than English, we can use a different stemmer such as the SnowballStemmer, which contains a family of stemmers for different languages. These stemmers are called “Snowball” because their creator invented a programming language with this name for creating new stemming algorithms.

First, let’s import the SnowballStemmer from the nltk.stem.snowball module.

from nltk.stem.snowball import SnowballStemmer

Let’s then print all the languages supported by the SnowballStemmer class.

SnowballStemmer.languages

('arabic',

'danish',

'dutch',

'english',

'finnish',

'french',

'german',

'hungarian',

'italian',

'norwegian',

'porter',

'portuguese',

'romanian',

'russian',

'spanish',

'swedish')

If we want to stem Italian words, we can then create a stemmer for the Italian language this way.

stemmer = SnowballStemmer("italian")

print(stemmer.stem("mangiare")) # -> mang

print(stemmer.stem("mangiato")) # -> mang

mang

mang

Lemmatization#

Lemmatization is used to group together the inflected forms of a word so that they can be analyzed as a single item, i.e. their lemma.

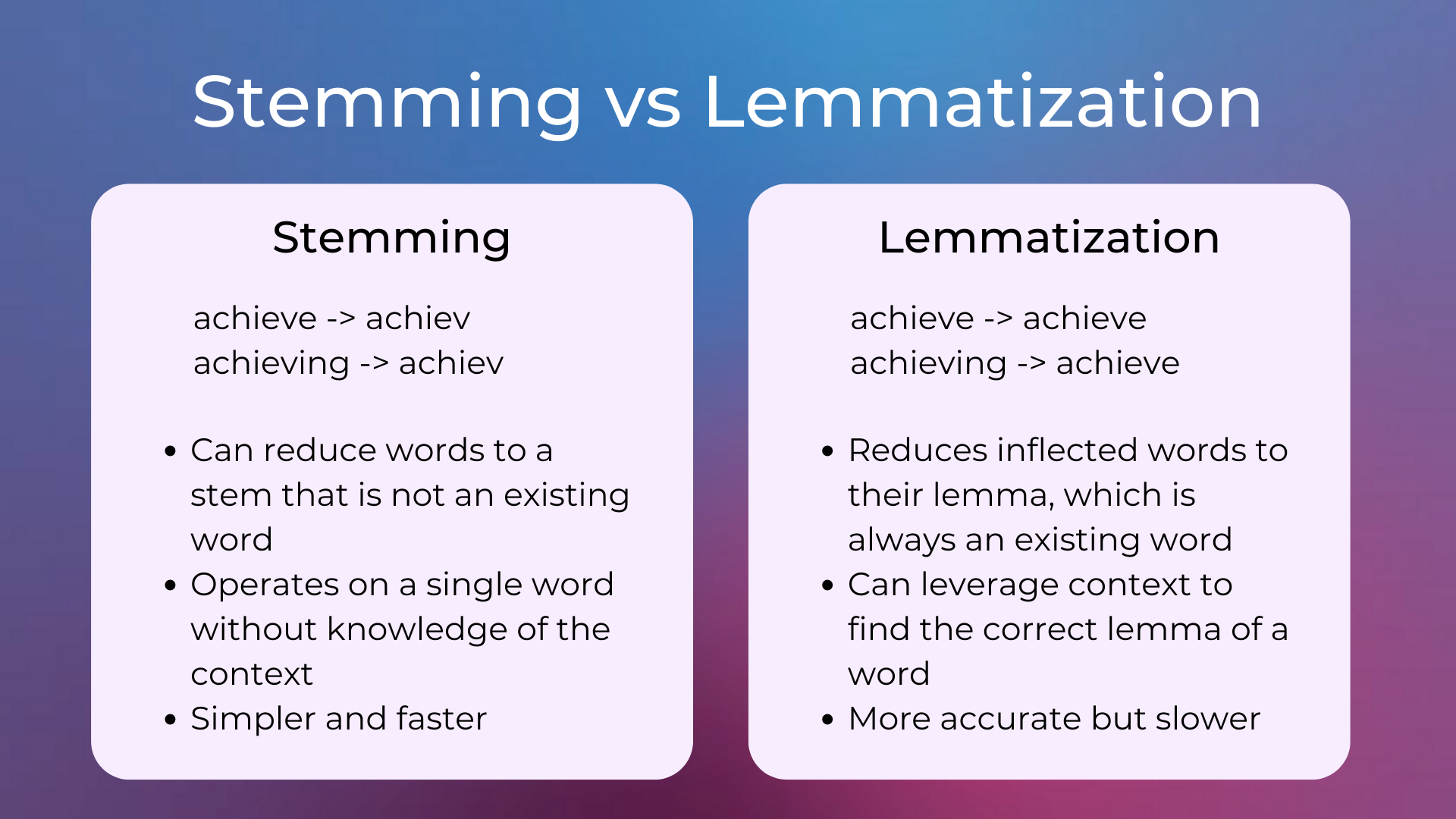

Lemmatization is closely related to stemming, but there are differences:

Lemmatization reduces inflected words to their lemma, which is an existing word. On the contrary, stemming can reduce words to a stem that is not an existing word.

Stemming operates on a single word without knowledge of the context. On the contrary, lemmatization can leverage context to find the correct lemma of a word.

Stemming is faster than lemmatization, therefore it may be more manageable when working on a huge number of texts. On the contrary, lemmatization is slower but more accurate than stemming, making it better for small-to-medium sized corpora.

Lemmatization in Python#

Lemmatization can be done in Python with the NLTK library. Let’s import the necessary modules and classes.

import nltk

nltk.download('wordnet')

nltk.download('omw-1.4')

nltk.download('averaged_perceptron_tagger')

from nltk.tokenize import word_tokenize

from nltk.stem import WordNetLemmatizer

We’ll use the WordNetLemmatizer which leverages WordNet to find existing lemmas. Then, we create an instance of the WordNetLemmatizer class and use the lemmatize method.

lemmatizer = WordNetLemmatizer()

print(lemmatizer.lemmatize("achieve")) # -> achieve

achieve

The lemmatizer is able to reduce the word “achieve” to its lemma “achieve”, differently from stemmers which reduce it to the non-existing word “achiev”.

Stopwords#

Some words, typically called stop-words, are so common in all texts that they add little to none information for tasks like text classification and others. Typical examples are articles and prepositions.

Stopwords in Python#

The NLTK library provides a list of stopwords for many languages. Here’s how to download them.

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

Then, we retrieve the stopwords for the English language with stopwords.words("english"). There are 179 stopwords in total, which are words (note that they are all in lowercase) that are very common in different types of English texts.

english_stopwords = stopwords.words('english')

print(f"There are {len(english_stopwords)} stopwords in English")

print(english_stopwords[:10])

There are 179 stopwords in English

['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', "you're"]

Stopwords are typically filtered as a preprocessing step before many NLP tasks.

POS Tagging#

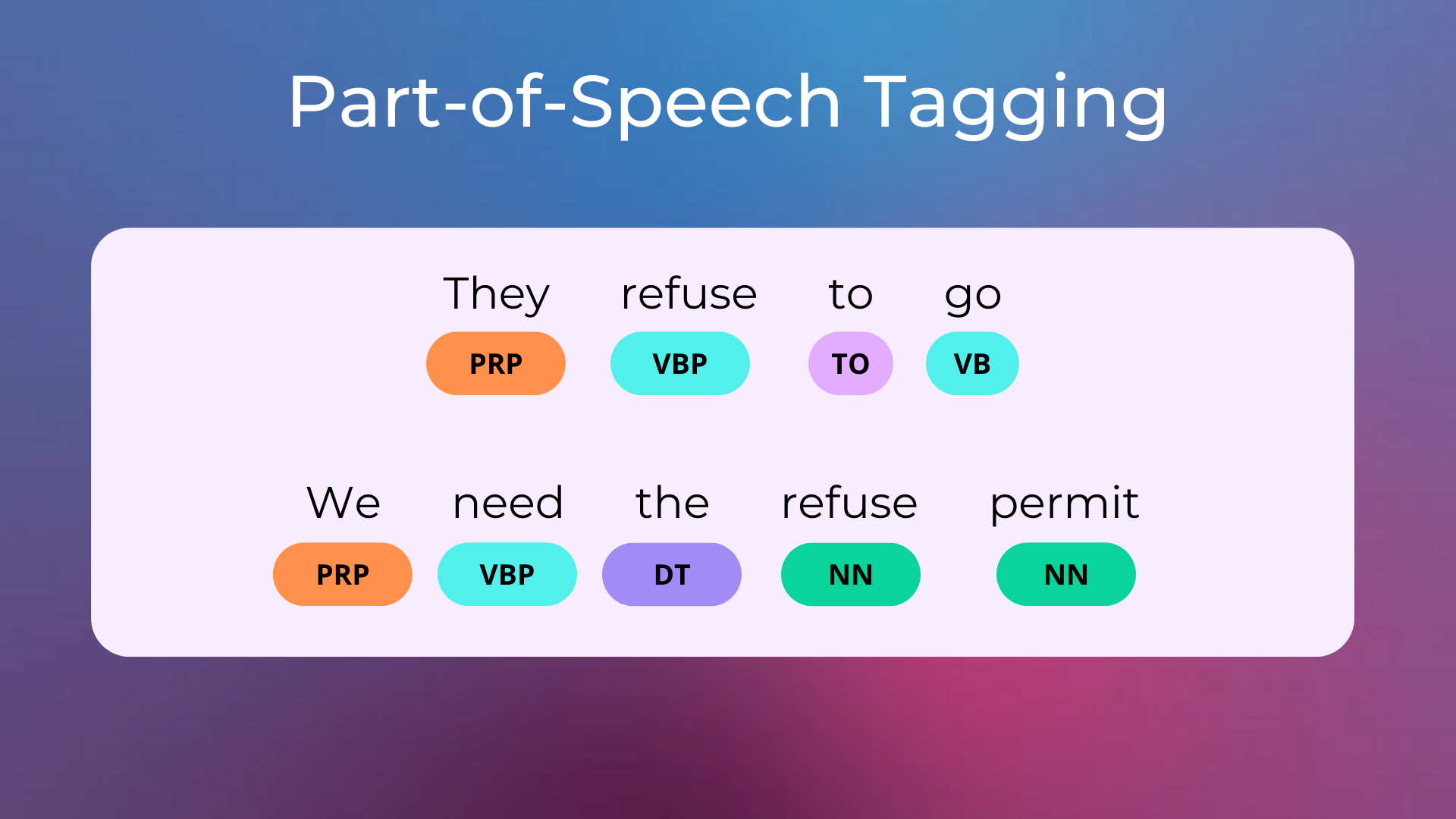

As we have seen with WordNet, there are words whose meanings differ according to their context. For example, the word “refuse” may be a verb (i.e. not willing to do something) and a noun (i.e. waste). Instead of distinguishing its meaning with WordNet and the Lesk algorithm, we may want to simply infer the part-of-speech of the word in the sentence.

The act of inferring the part of speech of words in sentences is called part-of-speech tagging or POS Tagging.

POS Tagging with Python#

The NLTK library provides an easy-to-use pos_tag function that takes a text as input and returns the part-of-speech of each token in the text.

text = word_tokenize("They refuse to go")

print(nltk.pos_tag(text))

text = word_tokenize("We need the refuse permit")

print(nltk.pos_tag(text))

[('They', 'PRP'), ('refuse', 'VBP'), ('to', 'TO'), ('go', 'VB')]

[('We', 'PRP'), ('need', 'VBP'), ('the', 'DT'), ('refuse', 'NN'), ('permit', 'NN')]

PRP are propositions, NN are nouns, VBP are present tense verbs, VB are verbs, DT are definite articles, and so on. Read this article to see the complete list of parts-of-speech that can be returned by the pos_tag function. In the previous example, the word “refuse” is correctly tagged as verb and noun depending on the context.

The pos_tag function assigns parts-of-speech to words leveraging their context (i.e. the sentences they are in), applying rules learned over tagged corpora.

POS Tagging can be used as a preprocessing step for text classification, NER, or also text-to-speech (e.g. the word “refuse” has a different pronunciation if it’s a noun or a verb).

Code Exercises#

Quiz#

What do we mean by inflection when talking about natural languages?

It’s the modification of a word to express different grammatical categories such as tense, case, and gender.

It’s the modification of a word over time to express different meanings.

It’s the tone of voice of a specific word relative to its context.

Answer

The correct answer is 1.

Which of the following reduces words always to a base-form which is an existing word?

Stemming.

Lemmatization.

Answer

The correct answer is 2.

Which of the following can leverage context to find the correct base form of a word?

Stemming.

Lemmatization.

Answer

The correct answer is 2.

Which of the following is typically faster?

Stemming.

Lemmatization.

Answer

The correct answer is 1.

What’s the name of the nltk stemmer class typically used for stemming in languages other than English?

ForeignStemmer.WinterStemmer.SnowballStemmer

Answer

The correct answer is 3.

What’s the name of the function typically used in nltk for extracting the part-of-speech of each token in a text?

nltk.pos_tag.nltk.lemmatize.nltk.get_stopwords.

Answer

The correct answer is 1.

Questions and Feedbacks#

Have questions about this lesson? Would you like to exchange ideas? Or would you like to point out something that needs to be corrected? Join the NLPlanet Discord server and interact with the community! There’s a specific channel for this course called practical-nlp-nlplanet.